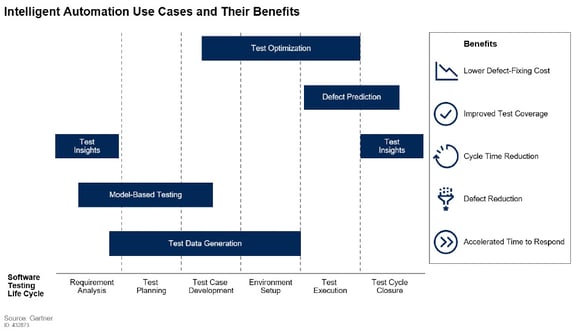

Gartner® report: Optimize Application Testing Quality and Speed With Embedded Intelligent Automation (IA) Services

According to Gartner, "Sourcing, procurement and vendor management leaders (SPVM) who wish to optimize the quality and speed of application testing as part of their IT services and solutions strategy and selection should:

- Increase application testing agility by working with application leaders to explore IA use cases, such as test optimization, defect prediction, model-based testing, test data generation and test insights.

- Optimize the costs of application testing services by asking the evaluation questions provided. Require service providers to document how they will incorporate IA into application testing services, for both new proposals and existing contracts.

- Evaluate the IA strategies of your application service providers by holding biannual vendor briefings to understand their IA investment strategies and the partnerships and tools they are working with. Initiate a proof of concept (POC) where there is opportunity for the providers to test their investments for a win-win outcome."

*Available for a limited time only

6 Reasons You Should Be Doing Behavior-Driven GUI Testing

When it comes to behavior-driven development (BDD), many are asking themselves questions like:

- Is BDD just Yet Another Hype, or is there something substantial?

- Is it relevant to my own work?

- Why should I consider a behavior-driven approach to test development?

The short answer: BDD is substantial, it is relevant to your work, and there are good reasons to adopt it. A behavior-driven approach to GUI test automation using Squish brings major benefits: you can test earlier and involve more people in the process, you gain concrete implementation advantages such as better modularization and tooling support, and you get more sophisticated ways to analyze test results.

How to Create Automated GUI Tests for Your Embedded Application

This webinar introduces you to the wonderful world of GUI test automation. What is GUI test automation, what are its benefits, and where is it used? We answer all these questions and guide you through setting up a testing environment for your embedded target and creating your first automated test. You'll also learn about different approaches to automated testing, such as image-based and object-based testing, and their individual strengths.