Physical AI isn't Built on Software Quality Shortcuts

Written by:

Annika Packalén

Product Marketing Lead – Software Quality Solutions, Qt Group

TL;DR

Key Highlights

- The verification gap is real. Organizations are deploying AI systems into the physical world using quality infrastructure designed for a previous era. The regulations haven't changed. What's changed is the gap between what teams are building and what their verification processes can actually prove.

- The stakes compound. Physical AI systems (robots, autonomous vehicles, surgical robotics) move AI from screens into environments where failures have immediate physical consequences. Defects found post-release cost 100-1000x more to fix. For Physical AI, multiply that by recalls, regulatory action, and liability exposure.

- Current tools can't see the problem. A significant portion of safety-critical AI code runs on specialized hardware (GPUs, accelerators) that traditional verification tools can't process. When your perception stack executes on hardware your tools ignore, you're verifying a fraction of what actually runs.

- Five key capabilities will separate leaders. From the software quality and compliance perspective, Physical AI demands architectural integrity across computational boundaries, verification that covers specialized hardware, automated compliance evidence, validated human-AI interfaces, and virtual validation before physical deployment. Most organizations lack at least three of these.

- Quality infrastructure becomes the differentiator. The companies mastering Physical AI aren't treating quality as a barrier. They're building it as the platform that makes safe deployment possible.



Every organization has quality gaps in their development process. Until now, those gaps were expensive but manageable. Software failures meant user complaints, bug fixes, maybe reputation damage. In safety-critical industries, the consequences could extend to recalls and regulatory action.

Physical AI changes the stakes. When AI moves from screens into surgical robotics, autonomous vehicles, and industrial systems operating alongside humans, quality shortcuts stop being technical debt. They become safety incidents.

The organizations building Physical AI today are discovering this firsthand. The ones succeeding already understand: the quality and verification infrastructure that was "good enough" for digital systems becomes catastrophically insufficient the moment AI enters the physical world.

The gap isn't regulatory.

The regulatory requirements and standards haven't fundamentally changed. The gap is between what teams are building and what their quality processes can actually verify.

Why Verification Gaps Matter Now

Physical AI isn't another technology trend. It's the test of every assumption you've made about acceptable software quality.

AI in robotics, autonomous vehicles, and industrial systems is moving from pilot programs to production deployment.

The growth is real. The foundation it's being built on is shaky.

Physical AI operates under constraints digital AI never faced. The physical world doesn't accept patches. A recommendation algorithm fails, you push a fix. A Physical AI system fails, someone gets hurt. You don't get a second chance to validate after the forklift hits someone or the surgical system makes a wrong cut.

This is why verification before deployment becomes non-negotiable. Gartner's research is explicit: virtual development and validation are the only viable approaches for safe, reliable Physical AI systems. You prove safety in simulation and testing before the system ever touches the physical world.

But here's the problem. Most organizations are building these complex systems on verification infrastructure and quality processes designed for simpler ones.

The physical world doesn't give you do-overs. You need verification infrastructure that can prove safety before deployment, because you can't patch your way out of a physical failure.

That's the verification gap. And it's widening.

What is Physical AI

According to NVIDIA, Physical AI is the practice of designing autonomous systems that directly interact with and operate within the physical world.

These autonomous systems manipulate objects, move through space, or sense physical phenomena.

Practical examples: warehouse robots, autonomous vehicles, surgical robotics systems, industrial automation equipment, drones for infrastructure inspection.

Gartner designated Physical AI a Top Strategic Technology Trend for 2026 and offered this litmus test:

"If you can throw it out the window, it's physical AI."

Pattern Lessons from the Automotive Industry

The automotive industry is a preview of your future. It demonstrates what happens when innovation outpaces verification infrastructure.

According to Recall Masters' State of Recalls report, over 28 million vehicles were recalled in 2024. Of these, 174 campaigns affecting 13.8 million vehicles were linked specifically to software and electronic system failures.

The global automotive cybersecurity market, valued at USD 3.5 billion in 2024, is projected to grow at 11.6% CAGR through 2034 (GM Insights). That growth represents billions being spent to retrofit security into architectures that should have been secure by design.

We can all admit that this is not careless engineering. These are teams building autonomous capabilities on architectural foundations that weren't designed for continuous software updates, let alone full autonomy.

It's a collision of two (or more) different eras.

But here's a pattern you need to recognize:

Organizations are building AI capabilities on quality processes designed for a previous era. Those processes couldn't scale. Now organizations are spending more than they should on fixing failures, and they will continue to do so unless the focus shifts back to quality infrastructure and AI risk management.

Defects found post-release cost 100 to 1000 times more to fix than those caught during development. When those defects involve Physical AI systems, multiply that by regulatory sanctions, recalls, and liability costs.

The recent Tesla Autopilot crash verdict serves as a warning for every organization across all safety-critical sectors. The jury found significant responsibility on the manufacturer's side.

The same pattern is forming across industries building Physical AI: quality gaps that seemed acceptable in traditional software become legal exposure when AI operates in the physical world.

The Leader’s Wake-Up Call to GPU Functional Safety

If your best engineers are drowning in manual verification, if compliance has become your bottleneck, if you're one GPU bug away from disaster; this guide is your roadmap out.


Where Current AI Testing Tools and Quality Infrastructures Break

Now add AI coding assistants to this equation. Industry research on AI testing trends indicates that AI-generated code has significantly higher defect rates than human-written code. The majority of samples contain logical or security flaws, and a majority of developers routinely rewrite or refactor AI-generated code before it's production-ready.

The CrowdStrike incident in July 2024 showed how quality failures cascade into safety crises. A faulty configuration update, read by code that lacked a single array bounds check, crashed 8.5 million Windows computers globally. Airlines and airports were impacted. Emergency services were disrupted. Hospitals canceled surgeries. The economic damage exceeded $10 billion.
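The class of defect behind that incident can be shown in miniature. The sketch below is not code from any real product: `EXPECTED_FIELDS`, `read_field`, and `load_records` are hypothetical names, and the field count is an assumption chosen for illustration. It only shows how a reader that validates the record size and the index before access turns a potential crash into a rejected input.

```python
# Illustrative sketch only: hypothetical names, not code from any real product.

EXPECTED_FIELDS = 21  # assumed number of fields the reader expects per record


class MalformedRecord(ValueError):
    """Raised when a record cannot be read safely."""


def read_field(record: list[str], index: int) -> str:
    """Return record[index] only after checking that the access is in bounds."""
    if len(record) != EXPECTED_FIELDS:
        raise MalformedRecord(
            f"expected {EXPECTED_FIELDS} fields, got {len(record)}"
        )
    if not 0 <= index < EXPECTED_FIELDS:
        raise MalformedRecord(f"field index {index} out of range")
    return record[index]


def load_records(records: list[list[str]], index: int) -> tuple[list[str], int]:
    """Read one field from every record, rejecting malformed ones instead of crashing."""
    values: list[str] = []
    rejected = 0
    for record in records:
        try:
            values.append(read_field(record, index))
        except MalformedRecord:
            rejected += 1
    return values, rejected
```

A record that arrives one field short is counted as rejected, and the remaining records are still processed. In a memory-unsafe language the same missing check can mean an out-of-bounds read, which is why static analysis and tests need to cover these paths before release.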

This is the state of the foundation we're relying on to build systems with full autonomy.

.png?width=850&name=Physical%20AI%20-%20blog%20(1).png)

What breaks when Physical AI meets current quality infrastructure:

Most software quality and AI testing tools weren't designed for the parallel processing architectures that power AI perception stacks. Traditional testing frameworks assume deterministic behavior in systems that are probabilistic by nature. Compliance workflows depend on manual documentation that can't scale to continuous deployment.

The quality testing and verification solutions stop at language boundaries, missing the critical interfaces where AI models coordinate with control systems.

A significant portion of safety-critical code in modern AI systems runs on specialized hardware that these traditional quality testing tools can't process. When perception stacks, sensor fusion algorithms, and decision-making logic execute on GPUs or other accelerators, the code doing the heavy lifting exists in a verification blind spot.
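A rough way to size that blind spot is to compare the code your analyzer can process with the code that actually ships. The sketch below is a minimal illustration under stated assumptions: `SUPPORTED_EXTENSIONS` describes a hypothetical analyzer, and the inventory numbers are made up. Real figures would come from your own repository and tool documentation.

```python
from pathlib import PurePosixPath

# Assumption: file extensions a hypothetical analyzer can process.
SUPPORTED_EXTENSIONS = {".c", ".h", ".cpp", ".hpp"}


def unverified_share(line_counts: dict[str, int]) -> float:
    """Return the fraction of source lines in files the analyzer cannot process."""
    total = sum(line_counts.values())
    if total == 0:
        return 0.0
    unseen = sum(
        lines
        for path, lines in line_counts.items()
        if PurePosixPath(path).suffix not in SUPPORTED_EXTENSIONS
    )
    return unseen / total


# Made-up inventory for illustration: path -> number of source lines.
inventory = {
    "control/planner.cpp": 6000,
    "control/planner.hpp": 1000,
    "perception/detect.cu": 2500,
    "perception/fusion.cu": 500,
}
print(f"{unverified_share(inventory):.0%} of lines fall outside the analyzer")
```

Line counts are a crude proxy, since a small GPU kernel can carry more safety relevance than a large utility module, but even this simple ratio makes the gap visible in a review.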

When an incident occurs and suitable quality solutions existed that could have met the required safety standards, incomplete AI validation and verification is no longer just a technical limitation. It is a liability risk.

What Physical AI Requires From Software Quality Infrastructure

Gartner's research reveals something worth understanding: the most successful Physical AI systems prioritize collaboration over full autonomy. According to their "Top Strategic Technology Trends for 2026: Physical AI" report, the market will be defined by intelligent machines that augment human skills by treating humans as partners, not problems to be solved.

Every interface where operators monitor AI behavior becomes a safety-critical surface. Every touchpoint where humans and machines collaborate requires systematic verification and testing. The human in the loop is still part of the safety architecture.

Still, Physical AI requires a quality infrastructure that can do five things your current setup probably can't:

1. Prove architectural integrity across every computational boundary.

Physical AI systems span CPUs, GPUs, edge processors, and specialized accelerators coordinating perception, cognition, and actuation stacks. You cannot prove safety for systems you cannot trace. Architecture verification must follow implementation wherever it executes, detecting when systems drift from design before that drift becomes a safety incident.

2. Verify the code that actually runs your AI systems.

A brilliant architecture is worthless if built with faulty components. Your static analyzers and testing tools need to identify errors before they become safety failures. They need to verify safety-critical code against domain-specific guidelines and standards, regardless of where that code executes. Partial verification is not verification. If your tools can't properly process the code running your AI perception stack, you're basically flying blind.

3. Generate compliance evidence continuously and automatically.

Organizations mastering Physical AI don't treat compliance as an end gate. AI compliance automation means code coverage measures what actually runs, traceability connects every line back to safety requirements, and architectural governance solutions ensure Freedom from Interference and software segregation across computational boundaries.

4. Validate human-AI interfaces with the same rigor as AI models.

When operators monitor AI behavior through dashboards and control systems, those interfaces become safety-critical surfaces. Test that humans can actually understand what the AI is communicating and respond before incidents occur. Your human-in-the-loop design only functions as a safety architecture if the loop actually closes in time.

5. Enable virtual validation before physical deployment.

As Gartner emphasizes in "Adopting Physical AI for Real-World Use Cases", use simulation and digital twin platforms to test Physical AI systems before deploying them in live environments. Develop cross-functional teams with a focus on governance, integration, and safety. Procure only solutions that comply with industry safety standards.
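To make the fifth capability concrete, here is a minimal sketch of a virtual validation gate. It is a toy model, not a simulation platform: the physics is a textbook stopping-distance formula, and the speeds, friction values, latency, and detection range are hypothetical numbers chosen for illustration.

```python
from itertools import product

G = 9.81  # gravitational acceleration in m/s^2


def stopping_distance(speed: float, latency: float, friction: float) -> float:
    """Distance travelled during the reaction latency plus the braking distance."""
    return speed * latency + speed ** 2 / (2 * friction * G)


def failing_scenarios(speeds, frictions, latency, detection_range):
    """Return every (speed, friction) pair where the vehicle cannot stop in time."""
    return [
        (speed, friction)
        for speed, friction in product(speeds, frictions)
        if stopping_distance(speed, latency, friction) > detection_range
    ]


# Hypothetical sweep: speeds in m/s, friction from a wet floor (0.3) to a dry one (0.6).
failures = failing_scenarios(
    speeds=[1.0, 2.0, 3.0],
    frictions=[0.3, 0.6],
    latency=0.2,          # seconds between detection and braking (assumed)
    detection_range=1.5,  # metres at which an obstacle is detected (assumed)
)
print(failures)  # [(3.0, 0.3)]
```

In this sweep only the fastest speed on the slipperiest floor fails, which is exactly the kind of corner case that is cheap to find in simulation and expensive to find on a warehouse floor. A release gate would block deployment while the list of failing scenarios is non-empty.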

Food for Thought:

Physical AI introduces unique testing challenges

Traditional testing assumes predictable behavior, transparent logic, and deterministic outcomes. AI models are unpredictable and probabilistic.

They consume resources that embedded systems can't spare and create attack surfaces that safety standards don't address.

That's the infrastructure gap.

Overcoming these challenges requires optimization techniques, specialized hardware, and rigorous testing and verification methodologies that your current infrastructure may lack.
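One such methodology is to replace exact pass/fail assertions with statistical acceptance criteria. The sketch below is an illustration under assumptions, not a prescribed method: it uses the Wilson score interval to put a lower bound on a success rate, and `noisy_detector` is a hypothetical stand-in for a probabilistic component.

```python
import math
import random


def wilson_lower_bound(successes: int, trials: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a success rate (z=1.96 is about 95%)."""
    if trials == 0:
        return 0.0
    p = successes / trials
    centre = p + z * z / (2 * trials)
    margin = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials))
    return (centre - margin) / (1 + z * z / trials)


def noisy_detector(rng: random.Random) -> bool:
    """Hypothetical probabilistic component that succeeds about 99% of the time."""
    return rng.random() < 0.99


def passes_acceptance(trials: int, required_rate: float, seed: int = 0) -> bool:
    """Run seeded trials and accept only if the lower bound meets the requirement."""
    rng = random.Random(seed)
    successes = sum(noisy_detector(rng) for _ in range(trials))
    return wilson_lower_bound(successes, trials) >= required_rate
```

The design choice is to test the bound rather than the observed rate. With this formula, 100 successes out of 100 trials only supports a claimed success rate of roughly 96%, so a requirement of 99.9% needs thousands of trials, which is one reason virtual validation matters.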

The Great Quality Revolution – What Leading Organizations Are Doing Differently

If we keep things as they are, we're entering an era where groundbreaking innovation will collide with software quality collapse.

Here's what the status quo produces:

Engineering teams spending far more time on validation and verification overhead than planned, often by half or more. Development velocity slowing with each feature addition as architectural complexity compounds. Quality becoming inconsistent because verification depth depends on which expert is available.

Innovation roadmaps stalling because leadership can't confidently defend new technology adoption to regulatory bodies. Projects consuming budgets without delivering ROI because the majority of resources go to manual solutions instead of building capabilities.

The status quo creates a compounding liability. Each autonomous system deployed on inadequate quality infrastructure represents accumulated risk.

Organizations leading the Physical AI era have stopped asking whether to invest in quality infrastructure. They're building it as the foundation that makes Physical AI and innovation possible.

They're implementing automated quality solutions, removing manual bottlenecks. They're building systems where minor updates don't trigger months-long re-validation cycles. When infrastructure handles safety and compliance automatically, engineering capacity focuses on building capabilities instead of fighting technical debt.

These organizations are mastering software quality infrastructure that lets them iterate on AI capabilities without compromising safety standards. They own their innovation roadmap. Systematic verification and quality are already becoming a market differentiator.

Gaining this competitive edge requires turning software quality from the barrier that slows innovation into the platform that enables it.

The companies that master software quality infrastructure first will be the ones capable of safely deploying Physical AI.

Welcome to the new era where groundbreaking innovation will meet the great quality revolution.

Strategic Playbook

GPU Acceleration or Unmanageable Compliance Risk? The Leader’s Wake-Up Call to Functional Safety

This is your playbook for speed AND safety through a proper compliance verification infrastructure.

Your organization is building the future: AI-driven MedTech products, autonomous vehicles, and industrial robots, all with strict quality and safety requirements.

You're forced to choose between shipping fast or shipping safe, when the whole point was to have both.


Explore The Success Stories

Success Story Heartland.Data | Axivion

A leading software development company replaced its static analysis tools with Axivion Suite to reduce setup and maintenance time resulting in an high...

Read More

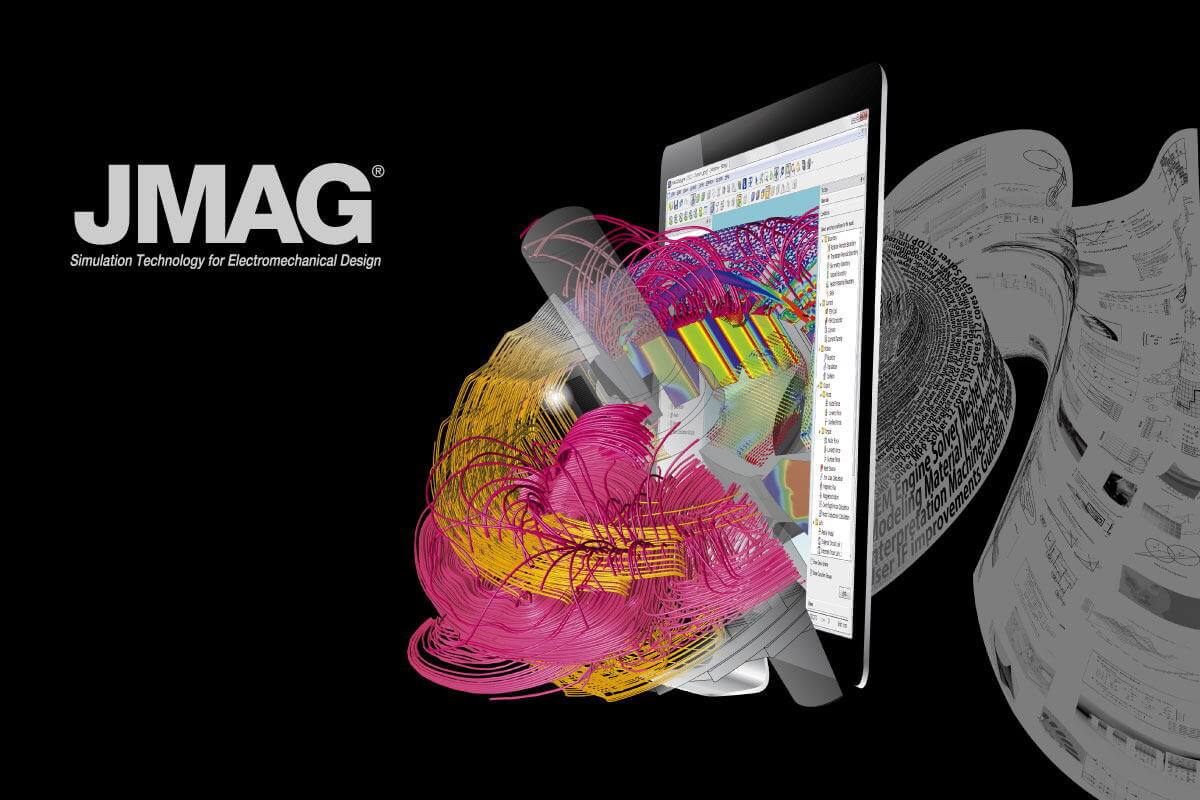

JSOL | Tested with Squish

JSOL Corporation enhances quality assurance in JMAG using Squish, automating 40% of test cases and reducing workload by 85%, ensuring efficient and re...

Read More

Success Story Schaeffler | Axivion

Architecture Verification of the Axivion Suite demonstrates Freedom from Interference in a Mixed ASIL Approach according to ISO 26262

Read More

Success Story ABB | Squish for Qt

Discover the winning combination of stability and convenience with Squish for Qt with the ABB Group. Elevate your testing process today!

Read More

Success Story Skyguide | Squish for Qt

Skyguide, headquartered in Geneva, Switzerland, is a company with a longstanding history of contribution to the development of Swiss aviation.

Read More