Which is the best LLM for prompting QML code (featuring DeepSeek v3)

February 05, 2025 by Peter Schneider

Claude 3.5 Sonnet is the best LLM to write QML code when prompted in English. If you want to know why we reached this conclusion, keep reading.

The QML100 Benchmark

As with the QML100FIM Benchmark we described in a previous blog post, we developed our own benchmark for prompted code generation: QML100. These benchmarks help us guide our customers and adjust our fine-tuning work.

What tasks does the QML100 Benchmark contain?

The QML100 Benchmark contains 100 coding challenges in English, such as "Generate QML code for a button that indicates with a change of shadow around the button that it has been pressed."

The first 50 tasks test the breadth of QML knowledge and cover most of the Qt Quick Controls. They include coding challenges for common components such as Buttons and less common controls such as MonthGrid.

The next 50 tasks cover typical UI framework applications such as "Create QML code for showing a tooltip when the user hovers with the mouse over a text input field." or "Create QML code for a behavior of a smooth animation every time a rectangle's x position is changed."
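To give a feel for what the two quoted tasks ask for, here is a minimal sketch of one possible answer to each. It is not a reference solution from the benchmark; the ids, sizes, colors, tooltip text, and the "Move" button are assumptions made up for illustration.

```qml
import QtQuick
import QtQuick.Controls

ApplicationWindow {
    width: 400
    height: 240
    visible: true

    // Tooltip task: TextField exposes `hovered`, and the attached ToolTip
    // properties show a tooltip while the pointer is over the field.
    TextField {
        id: nameField
        x: 20
        y: 20
        placeholderText: "Name"
        hoverEnabled: true
        ToolTip.visible: hovered
        ToolTip.text: "Enter your name"   // hypothetical tooltip text
    }

    // Behavior task: every change of x is animated instead of applied at once.
    Rectangle {
        id: box
        y: 120
        width: 50
        height: 50
        color: "steelblue"

        Behavior on x {
            NumberAnimation { duration: 300; easing.type: Easing.InOutQuad }
        }
    }

    // Helper only for this sketch: clicking changes x, which triggers the Behavior.
    Button {
        x: 20
        y: 190
        text: "Move"
        onClicked: box.x = (box.x === 0) ? 300 : 0
    }
}
```

The `Behavior on x` block is what makes the animation apply to every change of `x`, regardless of where the change comes from.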

How challenging are the QML100 Benchmark tasks?

QML100 includes simple tasks that all LLMs complete successfully, such as: "Generate QML code for a Hello World application. The Hello World text shall be displayed in the middle of the window on a light grey background."
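For orientation, an answer to the Hello World task could look roughly like the sketch below. The window size and title are assumptions; the task itself only prescribes the centered text and the light grey background.

```qml
import QtQuick

Window {
    width: 640            // assumed size, not part of the task
    height: 480
    visible: true
    title: "Hello World"  // assumed title
    color: "lightgrey"    // light grey background, as the task requires

    Text {
        anchors.centerIn: parent   // keeps the text in the middle of the window
        text: "Hello World"
    }
}
```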

QML100 also includes difficult tasks that all LLMs still fail, such as "Create QML code for a tree view displaying vegetables and things you can cook from them in their branch nodes. It shall be possible to expand and collapse branches through a mouse click."

The benchmark also includes a few tasks that ask the LLM to fix a bug in existing code or to extend existing code.

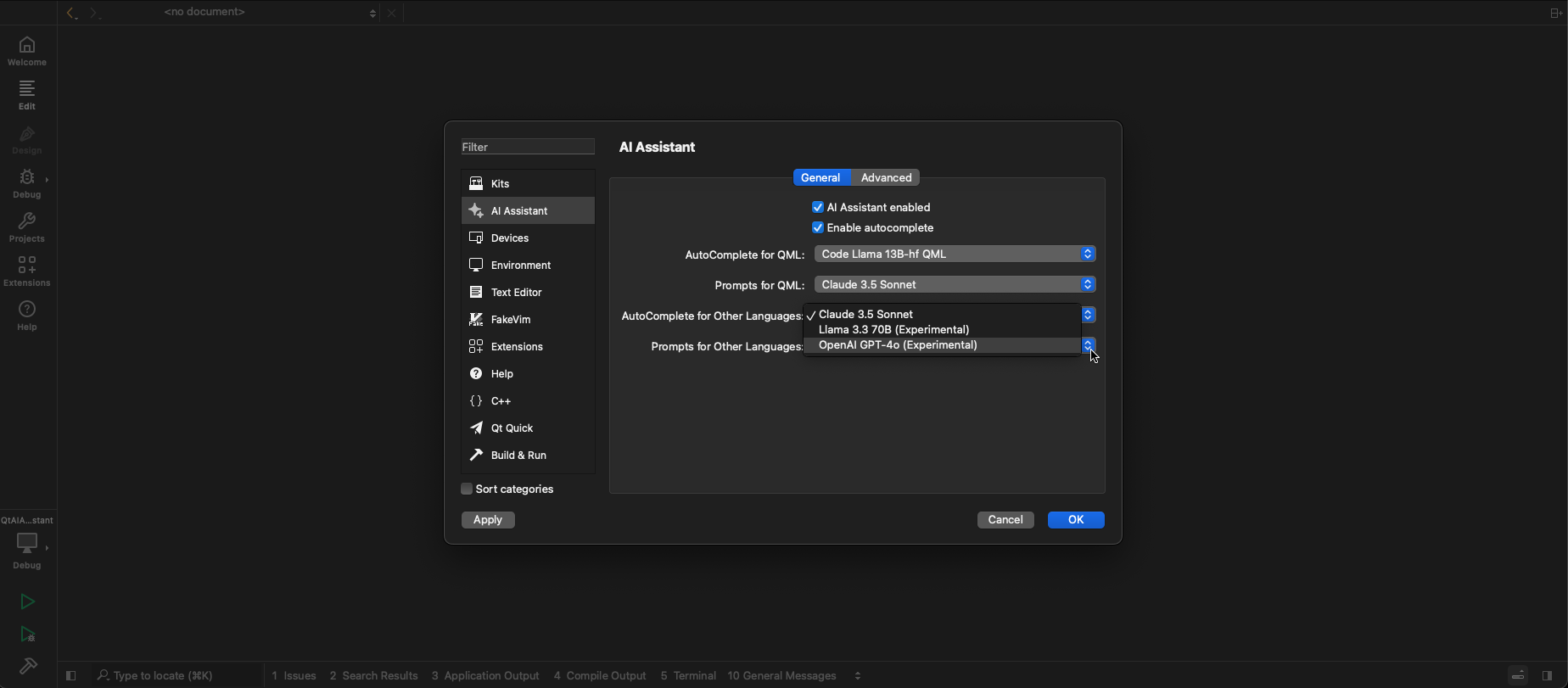

Image: LLM selection for Qt AI Assistant in Qt Creator

A few tasks measure knowledge of the built-in capabilities of a Qt Quick Control, such as "Create QML code for a checkbox which is set to status checked by a touch interaction from a user." Many LLMs tend to write code for every part of the instructions, and the resulting code fails this task. A CheckBox already toggles its checked state when the user interacts with it, so adding a handler `onClicked: checked = !checked` flips the state straight back and renders the UI control useless.

Not included are multi-file challenges that require the LLM to read context from multiple files, and tasks related to Qt Quick 3D, Qt Multimedia, Qt Charts, Qt PDF, Qt Positioning, or similar modules.
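The CheckBox pitfall can be sketched as follows. This is an illustration of the failure mode described above, not code taken from any LLM's benchmark response; the labels and layout are assumptions.

```qml
import QtQuick
import QtQuick.Controls

ApplicationWindow {
    width: 320
    height: 160
    visible: true

    Column {
        anchors.centerIn: parent
        spacing: 12

        // Passes the task: the control toggles `checked` by itself on tap or click.
        CheckBox {
            text: "Built-in toggle"
        }

        // Fails the task: the control toggles first, then the handler
        // toggles again, so the box ends up in its previous state.
        CheckBox {
            text: "Toggled twice"
            onClicked: checked = !checked
        }
    }
}
```

Running both side by side makes the difference visible: the first box changes state on every click, while the second appears stuck.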

When is the generated QML code considered correct?

The generated code is manually checked to run in Qt Creator 15 with the Qt 6.8.1 kit. It must not contain run-time errors and must solve the task. Redundant import version numbers or unnecessary additional code do not lead to disqualification (as long as they work).

We prompt each LLM only once. We don't do "best out of three" or anything like that. Considering the probabilistic nature of LLMs, we can assume that benchmark responses are never precisely the same when repeated. Hence, I wouldn't read too much into an LLM being one percentage point better or worse than the next one.
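As a rough illustration of why small differences should not be over-interpreted, one can treat each of the 100 tasks as an independent pass/fail trial. This is a simplifying assumption, not how the benchmark was analyzed. For a measured success rate of 66% on 100 tasks, the binomial standard error is then:

$$
\mathrm{SE} = \sqrt{\frac{p\,(1-p)}{n}} = \sqrt{\frac{0.66 \times 0.34}{100}} \approx 0.047
$$

That is close to five percentage points, so under this assumption a gap of one or two points between two models is well within the noise of a single run.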

Which LLMs were tested?

New LLMs pop up like mushrooms after a rainy day. We have tested the majority of mainstream LLMs: both LLMs that are available as a commercial cloud service and some that can be self-hosted free of royalties.

Which LLM generates the best code when prompted in English?

The best royalty-free LLM for writing QML code is DeepSeek-V3, which has a score of 57% for successful code creation. The best overall LLM is Claude 3.5 Sonnet, with a success rate of 66%.

The performance of GitHub Copilot Chat in Visual Studio Code, which was 42% at the end of September 2024, is somewhat disappointing. We hope that this performance has improved by now. However, most of the results above are also from Q4 2024, and only DeepSeek-V3, Mistral Large 2.1, and OpenAI o1 have been benchmarked this year. StarCoder2 results are from Q2 2024.

The performance of StarCoder15B is a bit disappointing, even though it has been trained on Qt documentation. However, we thought we should give it a try because it is the only model with transparent pre-training data.

Overall, considering both code completion and prompt-based code generation skills, Claude 3.5 Sonnet is a serious option for individuals and customers if it comes down purely to QML coding skills. However, other aspects such as cost-efficiency, IPR protection, and pre-training data transparency should also play a role in selecting the best LLM for your software creation project.

Qt AI Assistant supports multiple LLMs

At the time of writing this blog post, Qt AI Assistant supports connections to Claude 3.5 Sonnet, Llama 3.3 70B, and GPT-4o for prompt-based code generation and expert advice. If you want to know more about what the Qt AI Assistant can do for you, please visit our product pages.

If you need instructions on how to get started, please refer to our documentation.

(Updated: Mistral Large performance updated from Q1/2024 to Q1/2025 figures.)

(Updated: Claude 3.7 Sonnet performance added on 28th of February 2025)
