When you are part of a team running tens of thousands of tests across hundreds of configurations, you quickly realize that managing quality at scale isn’t just about test coverage; it’s about visibility.

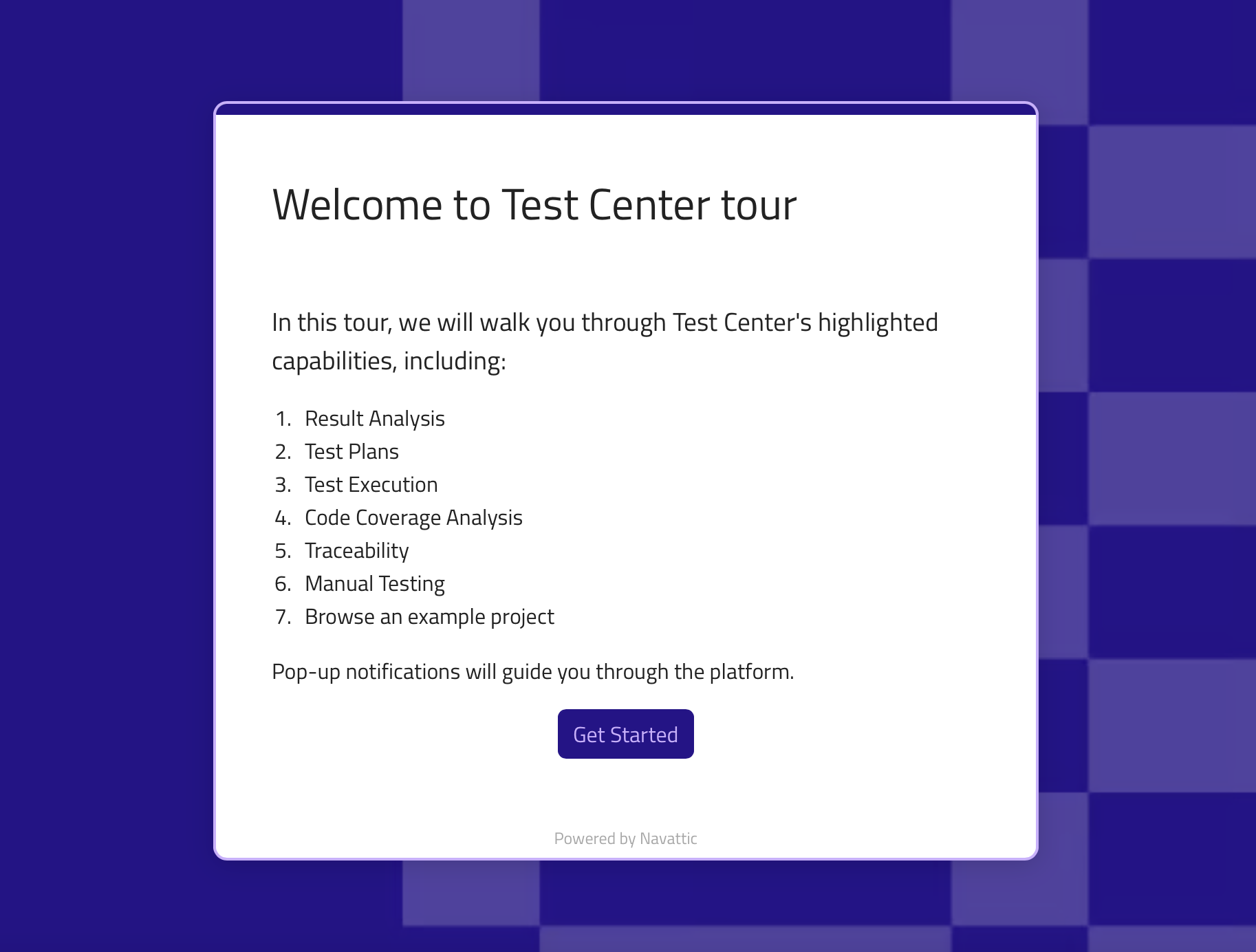

Over the years, I have seen QA teams struggle not with writing tests, but with tracking them. And honestly, the tools we traditionally rely on (manual PDFs, spreadsheets, siloed dashboards) just don’t scale. When your results are scattered across formats and platforms, it becomes nearly impossible to confidently trace failures or spot trends. That is why, in a recent session, I walked through how we’re using Test Center to bring order, visibility, and speed to our QA process. Here's what I shared.

Why Test Reporting Breaks Down at Scale

In large teams, it is not unusual to run 10,000+ tests across 150 configurations. These combinations might include multiple OS platforms (Windows, Linux, macOS), browser variants, and hardware differences.

Now imagine trying to manage all of that with PDFs and Excel sheets. I've seen teams doing exactly that and losing valuable time tracking down which test failed, on which config, and why. The lack of real-time visibility creates friction, slows down releases, and turns audits into stressful scrambles.

For me, the goal of test reporting is not to prove QA exists, but to make test results a shared, accessible asset that supports faster, more confident releases.

One Unified View, No Matter the Platform

With Test Center, we centralized our test results in one place. Whether tests run on Windows, Linux, or macOS, they’re all pulled into a unified dashboard, organized by project, batch, and config. This is a game-changer.

Instead of bouncing between test environments or manually stitching reports together, our team can instantly:

- Filter by platform

- Spot trends

- Drill down into individual test runs

- Compare results across batches

You don't need to be a QA lead to make sense of the data: it’s intuitive and actionable right out of the box.
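Under the hood, comparing batches comes down to lining up the same test on the same configuration across two runs and classifying what changed. The following is a hypothetical illustration of that idea in Python, not Test Center's data model or API: results are plain dictionaries keyed by `(test name, configuration)`, and `compare_batches` is an illustrative helper name.

```python
# Hypothetical sketch of batch comparison; not Test Center's data model or API.
# Each batch maps (test name, configuration) -> outcome ("passed" or "failed").
from typing import Dict, List, Tuple

Key = Tuple[str, str]  # (test name, configuration)


def compare_batches(previous: Dict[Key, str], current: Dict[Key, str]) -> Dict[str, List[Key]]:
    """Classify each (test, config) pair in `current` by how its outcome changed."""
    changes: Dict[str, List[Key]] = {
        "new_failures": [],   # passed before, failing now (likely regressions)
        "fixed": [],          # failing before, passing now
        "still_failing": [],  # failing in both batches
        "added": [],          # not present in the previous batch
    }
    for key, outcome in sorted(current.items()):
        before = previous.get(key)
        if before is None:
            changes["added"].append(key)
        elif before == "passed" and outcome == "failed":
            changes["new_failures"].append(key)
        elif before == "failed" and outcome == "passed":
            changes["fixed"].append(key)
        elif before == "failed" and outcome == "failed":
            changes["still_failing"].append(key)
    return changes
```

Keying on the configuration as well as the test name matters at scale: a test that regresses only on one OS and browser combination shows up as its own entry instead of being hidden by passes elsewhere.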

Debugging With Context: Logs, Screenshots, and Videos

One of the most valuable features I showed during the session was visual debugging.

I demoed a Pytest and Selenium test that failed because of a simple typo in the expected result. Instead of making me dig through logs, Test Center gave me instant context:

- A screenshot of the test at the exact moment it failed

- A step-by-step log of what led up to it

- And (if you’ve enabled it) a video recording of the entire run

This kind of clarity dramatically reduces the time it takes to diagnose and fix issues. Instead of playing a guessing game, you see exactly what happened.

Audit-Ready, Out of the Box

If you're in a regulated industry, or just preparing for internal reviews, you know how painful it is to assemble structured test reports manually.

With Test Center, we can generate audit-ready, exportable reports in a few clicks. They’re built with certification and compliance in mind, so no formatting hacks are needed.

Bringing Manual and Automated Testing Together

Automated testing is essential, but there are still plenty of cases where manual testing matters, such as user acceptance testing or rare edge cases that are hard to automate.

What I love about Test Center is that we can treat manual and automated tests as part of the same process. We use test plans to:

- Create reusable test plans

- Assign manual test executions to testers

- Track status across platforms

- Automatically incorporate automated test results in real time
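Conceptually, a mixed plan is one list of entries where testers update the manual ones and incoming results fill in the automated ones. The following is a purely illustrative Python model of that idea; `PlanEntry`, `apply_automated_results`, and `plan_progress` are hypothetical names, not part of Test Center.

```python
# Hypothetical, illustrative model of one test plan that mixes manual and
# automated executions; not Test Center's data model or API.
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class PlanEntry:
    name: str
    kind: str                # "manual" or "automated"
    assignee: Optional[str]  # tester for manual runs, None for automated
    status: str = "pending"  # "pending", "passed" or "failed"


def apply_automated_results(entries: List[PlanEntry], results: Dict[str, str]) -> None:
    """Copy incoming automated outcomes onto the matching automated entries.

    Manual entries are never overwritten; testers update those themselves.
    """
    for entry in entries:
        if entry.kind == "automated" and entry.name in results:
            entry.status = results[entry.name]


def plan_progress(entries: List[PlanEntry]) -> Tuple[float, Counter]:
    """Return (percent of entries executed, counts per (kind, status))."""
    executed = sum(1 for entry in entries if entry.status != "pending")
    percent = 100.0 * executed / len(entries) if entries else 0.0
    counts = Counter((entry.kind, entry.status) for entry in entries)
    return percent, counts
```

Because both kinds of entry live in one list, a single `plan_progress` call gives one view of progress across manual and automated work.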

One of the messages I wanted to convey during the talk was that you should combine manual and automated testing. I believe this is one of the ways you can build trust in your test coverage and your releases. As I mentioned:

"With Test Center, you can include both manual and automated tests in the same test plan. That way, you track results and progress together, giving a clearer picture of test coverage across your whole project."

Integrations That Just Work

Most QA teams work with a complex stack: frameworks like Pytest, Squish, and Robot Framework, plus tools like Jira, TestRail, or Zephyr. We needed a reporting tool that could fit into our ecosystem, not fight it.

With Test Center’s Result Reporting API, we pushed results from multiple frameworks directly into the platform. During the demo, I showed a custom Pytest plugin that:

- Captured assertions and screenshots on failure

- Streamed results into Test Center via HTTP

- Required zero manual uploads

Robot Framework worked just as smoothly. Using its listener interface, we pushed results during runtime with a single command-line parameter and no changes to the test code.
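A listener of that kind can be sketched in plain Python. This minimal sketch uses Robot Framework's listener API version 2, where `end_test(name, attrs)` receives the test's `longname`, `status`, `message`, and `elapsedtime` (in milliseconds). The `TESTCENTER_URL` environment variable, the `config` argument, and the payload fields are hypothetical assumptions, not Test Center's documented format.

```python
# TestCenterListener.py: a minimal sketch of a Robot Framework listener
# (listener API version 2) that streams each finished test over HTTP.
# ASSUMPTIONS (hypothetical): the TESTCENTER_URL environment variable and
# the JSON payload fields; check your server's Result Reporting API docs.
import json
import os
import urllib.request


def post_json(url, payload, timeout=10.0):
    """POST a JSON document and return the HTTP status code."""
    data = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


class TestCenterListener:
    ROBOT_LISTENER_API_VERSION = 2

    # Robot Framework reports PASS/FAIL/SKIP; map them to lowercase outcomes.
    STATUS_MAP = {"PASS": "passed", "FAIL": "failed", "SKIP": "skipped"}

    def __init__(self, config="unknown-config"):
        self.url = os.environ.get("TESTCENTER_URL", "")  # hypothetical
        self.config = config
        self.transport = post_json  # swappable, e.g. for a dry run

    def end_test(self, name, attrs):
        """Called by Robot Framework after each test case finishes."""
        payload = {
            "test": attrs["longname"],
            "config": self.config,
            "outcome": self.STATUS_MAP.get(attrs["status"], attrs["status"].lower()),
            "duration_s": attrs["elapsedtime"] / 1000.0,  # elapsedtime is in ms
            "message": attrs["message"],
        }
        if self.url:
            self.transport(self.url, payload)
```

Saved as `TestCenterListener.py`, it is attached with one parameter, for example `robot --listener TestCenterListener.py:linux-chrome tests/`, where the text after the colon is passed to the constructor as `config`. Robot Framework uses the class because it has the same name as the module.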

The philosophy is simple: test data shouldn’t be locked into individual tools. That’s why integrations with Jira, Polarion, TestRail, and Zephyr are so valuable. You can link test cases to tickets, reopen issues based on failures, or file bugs directly from the Test Center dashboard.

Final Thoughts: Visibility Over Volume

We're not struggling to write tests anymore. The real challenge is managing them, understanding them, and acting on them.

With Test Center, we’ve been able to take the chaos out of QA reporting. We’ve turned test results into something the whole team can access, trust, and use to move faster. And for me, that’s what modern quality assurance is all about.

If you’re trying to scale your QA process and feel like you’re drowning in results, I strongly encourage you to take a look at Test Center. It changed the way we work.

QA teams today aren’t struggling to write tests. They are struggling to track, debug, and report them at scale. The more configurations you support, the more important centralization, context, and automation become.

Jose Campos’ walkthrough of Test Center was a clear reminder: modern test reporting is about removing friction in debugging, planning, integrating, and communicating test results across your team.